According to law enforcement and intelligence agencies, encryption should come with a backdoor. It’s not a new policy position—it dates to the Crypto Wars of the 1990s—but it’s gaining new Beltway currency.

Cryptographic backdoors are a bad idea. They introduce unquantifiable security risks, like the recent FREAK vulnerability. They could equip oppressive governments, not just the United States. They chill free speech. They impose costs on innovators and reduce foreign demand for American products. The list of objections runs long.

I’d like to articulate an additional, pragmatic argument against backdoors. It’s a little subtle, and it cuts across technology, policy, and law. Once you see it, though, you can’t unsee it.

Cryptographic backdoors will not work. As a matter of technology, they are deeply incompatible with modern software platforms. And as a matter of policy and law, addressing those incompatibilities would require intolerable regulation of the technology sector. Any attempt to mandate backdoors will merely escalate an arms race, where usable and secure software stays a step ahead of the government.

The easiest way to understand the argument is to walk through a hypothetical. I’m going to use Android; much of the same analysis would apply to iOS or any other mobile platform.

An Android Hypothetical

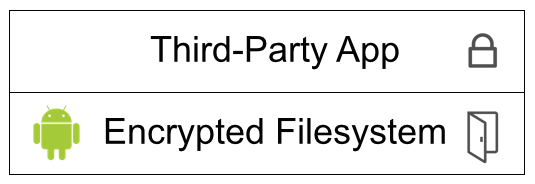

Imagine that Google rolls over and backdoors Android. For purposes of this post, the specifics of the backdoor architecture don’t matter. (My recent conversations with federal policymakers have emphasized key escrow designs, and the Washington Post’s reporting is consistent.) Google follows the law, and it compromises Android’s disk encryption.

But there’s an immediate problem: what about third-party apps? Android is, by design, a platform. Google has deliberately made it trivial to create, distribute, and use new software. What prevents a developer from building their own secure data store on top of Android’s backdoored storage? What prevents a developer from building their own end-to-end secure messaging app?
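
To make the layering concrete, here is a minimal Python sketch of an app that seals its data under its own key before the platform ever sees it. Everything in it is an assumption for illustration: `seal`, `open_sealed`, `platform_key`, and `app_key` are hypothetical names, and the cipher is a toy built from SHA-256 and HMAC so the example runs with the standard library alone. A real app would use a vetted library instead.

```python
import hashlib
import hmac
import secrets


def _subkey(key: bytes, label: bytes) -> bytes:
    # Derive separate encryption and MAC keys from one master key.
    return hashlib.sha256(label + key).digest()


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Toy keystream: SHA-256 in counter mode. Illustration only.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def seal(key: bytes, plaintext: bytes) -> bytes:
    # Encrypt-then-MAC: output is nonce (16) || ciphertext || tag (32).
    nonce = secrets.token_bytes(16)
    stream = _keystream(_subkey(key, b"enc"), nonce, len(plaintext))
    body = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(_subkey(key, b"mac"), nonce + body, hashlib.sha256).digest()
    return nonce + body + tag


def open_sealed(key: bytes, blob: bytes) -> bytes:
    # Verify the tag first; a wrong key fails here.
    nonce, body, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(_subkey(key, b"mac"), nonce + body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("wrong key or corrupted blob")
    stream = _keystream(_subkey(key, b"enc"), nonce, len(body))
    return bytes(c ^ s for c, s in zip(body, stream))


# Hypothetical keys: the platform key is escrowed, the app key is not.
platform_key = secrets.token_bytes(32)
app_key = secrets.token_bytes(32)

note = b"meet at noon"
on_disk = seal(platform_key, seal(app_key, note))

# What an escrow holder recovers by opening the platform layer:
escrow_view = open_sealed(platform_key, on_disk)
```

In this sketch the escrowed key opens only the outer layer. `escrow_view` is still the app's own ciphertext, and only `app_key` turns it back into `note`.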

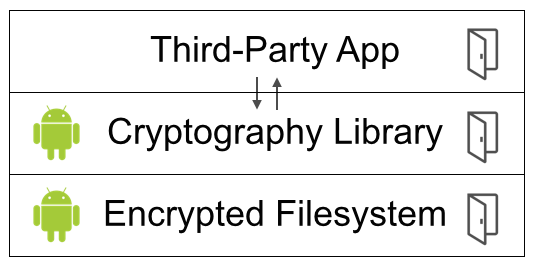

The obvious answer is that Google can’t stop with just backdooring disk encryption. It has to backdoor the entire Android cryptography library. Whenever a third-party app generates an encrypted blob of data, for any purpose, that blob has to include a backdoor.

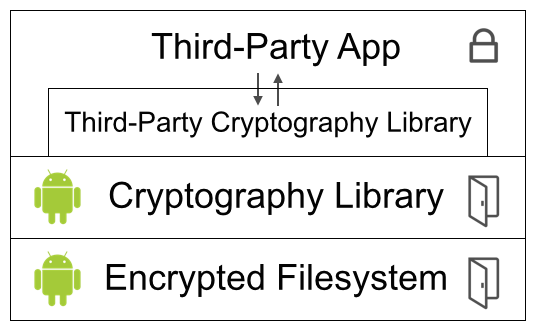

Now there’s another problem: what about third-party apps that don’t rely on the Android cryptography library? It’s already common practice to use alternatives. Maybe the government could require some commercial libraries, like Facebook Conceal, to incorporate backdoors. Federal authorities certainly won’t be able to reach free, open source, international[1] libraries, like OpenSSL, NaCl, or Bouncy Castle. The jurisdictional obstacles to regulation are insurmountable. What’s more, there would be serious First Amendment issues, since a cryptography library is largely math (i.e. speech).

So, how can the government make sure that Android apps use only backdoored libraries? Direct regulation of app developers wouldn’t be enough, since many developers are outside the reach of the American legal system. And, if Google wanted, it could allow developers to submit apps anonymously.[2]

The solution would have to be intermediary liability, where Google is compelled to play gatekeeper.

One option: require Google to police its app store for strong cryptography. Another option: mandate a notice-and-takedown system, where the government is responsible for spotting secure apps, and Google has a grace period to remove them. Either alternative would, of course, be entirely unacceptable to the technology sector—the DMCA’s notice-and-takedown system is widely reviled, and present federal law (CDA 230) disfavors intermediary liability.

This hypothetical is already beyond the realm of political feasibility, but keep going. Assume the federal government sticks Google with intermediary liability. How will Google (or the government) distinguish between apps that have strong cryptography and apps that have backdoored cryptography?

There isn’t a good solution. Auditing app installation bundles, or even requiring developers to hand over source code, would not be sufficient. Apps can trivially download and incorporate new code. Auditing running apps would add even more complexity. And, at any rate, both static and dynamic analysis are unsolved challenges—just look at how much trouble Google has had identifying malware and knockoff apps.
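
A toy Python sketch shows why auditing the bundle falls short. The audit rule (`BANNED_MARKERS`), the bundle, and `fake_fetch` are all hypothetical, and the "cryptography" is a one-byte XOR stand-in. The only point is that the audited source contains no cryptography at all, because that code arrives at runtime.

```python
# Hypothetical audit rule: reject bundles that mention these words.
BANNED_MARKERS = ("encrypt", "decrypt")


def static_audit_passes(bundle_source: str) -> bool:
    # Stand-in for a store-side scan of the submitted bundle.
    lowered = bundle_source.lower()
    return not any(marker in lowered for marker in BANNED_MARKERS)


# The submitted bundle: a loader and nothing else.
BUNDLE_SOURCE = '''
def load_plugin(fetch):
    namespace = {}
    exec(fetch(), namespace)  # run whatever code was fetched
    return namespace
'''


def fake_fetch() -> str:
    # Stand-in for a network download that happens after installation.
    # The XOR "cipher" is a placeholder, not real cryptography.
    return "def encrypt(data, key):\n    return bytes(b ^ key for b in data)\n"


audit_ok = static_audit_passes(BUNDLE_SOURCE)

# After install, the app runs its loader and gains the new function.
app = {}
exec(BUNDLE_SOURCE, app)
plugin = app["load_plugin"](fake_fetch)
```

Here `audit_ok` is true, yet `plugin` ends up holding an `encrypt` function that no reviewer ever saw.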

Continue with the hypothetical, though. Imagine that Google could successfully banish secure encryption apps from the official Google Play store. What about apps that are loaded from another app store? The government could feasibly regulate some competitors, like the Amazon Appstore. How, though, would it reach international, free, open source app repositories like F-Droid or Fossdroid? What about apps that a user directly downloads and installs (“sideloads”) from a developer’s website?

The only solution is an app kill switch.[3] (Google’s euphemism is “Remote Application Removal.”) Whenever the government discovers a strong encryption app, it would compel Google to nuke the app from Android phones worldwide. That level of government intrusion, reaching into personal devices to remove security software, certainly would not be well received. It raises serious Fourth Amendment issues, since it could be construed as a search of the device or a seizure of device functionality and app data.[4] What’s more, the collateral damage would be extensive; innocent users of the app would lose their data.

Designing an effective app kill switch also isn’t so easy. The concept is feasible for app store downloads, since those apps are tagged with a consistent identifier. But a naïve kill switch design is trivial to circumvent with a sideloaded app. The developer could easily generate a random application identifier for each download.[5]
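
The circumvention is easy to sketch in Python. The blocklist, the `org.example` prefix, and both function names are hypothetical; this is not how Google's removal mechanism is implemented, only the simplest identifier-matching design one could imagine.

```python
import secrets

# Hypothetical list of identifiers the kill switch has been told to remove.
BLOCKLIST = {"org.example.securechat"}


def kill_switch_matches(package_id: str) -> bool:
    # Naive design: remove an app only if its identifier is listed.
    return package_id in BLOCKLIST


def package_id_for_download() -> str:
    # The developer's server stamps every download with a fresh identifier.
    # The leading letter keeps the last segment a valid name.
    return "org.example.a" + secrets.token_hex(8)


first = package_id_for_download()
second = package_id_for_download()
```

Each download carries an identifier that has never been seen before, so a list of known identifiers is always one step behind.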

Google would have to build a much more sophisticated kill switch, scanning apps for prohibited traits. Think antivirus, but for detecting and removing apps that the user wants. That’s yet another unsolved technical challenge, yet another objectionable intrusion into personal devices, and yet another practice with constitutional vulnerability.

Stick with the hypothetical, and assume the app kill switch works.[6] Secure native apps are gone.

What about browser-based apps? It’s possible to build a secure data store or messaging app that loads entirely over the web, from the user interface to the cryptography library, and gets saved on the user’s device. The requisite web standards are already in place. This is not a good engineering design, to be clear—it should only be a last resort—but it is possible. And it circumvents the Android cryptography library, Google Play restrictions, and the app kill switch.

That leaves just one option.[7] In order to prevent secure data storage and end-to-end secure messaging, the government would have to block these web apps. The United States would have to engage in Internet censorship.

Are Criminals Really That Smart?

It’s easy to spot the leading counterargument to this entire line of reasoning. I’ve heard it firsthand from both law enforcement and intelligence officials.

“Sure,” the response goes, “it’s impossible to entirely block secure apps. Sophisticated criminals will always have good operational security. But we don’t need complete backdoor coverage. If we can significantly increase the barriers to secure storage and messaging, that’s still a big win. Most criminals really aren’t so smart.”

That response isn’t convincing. We’re already talking about the smart criminals here.[8] Android and iOS continue to allow for government access to data by default.

In order to believe that backdoors will work,[9] we have to believe there is a set of criminals who are smart enough to do all of the following:

- Disable default device backups to the cloud. Otherwise, the government can obtain device content directly from the cloud provider.

- Disable default device key backups to the cloud, in case the government retrieves the device. Otherwise, the government can obtain the key from the cloud, and decrypt the device.

- Disable default device biometric decryption, in case the government retrieves the device and detains its owner. Otherwise, the government can compel the owner to decrypt the device.

- Avoid sending incriminating evidence by text message, email, or any other communications system that isn’t end-to-end secure. Otherwise, the government can prospectively intercept messages, and can often obtain past communications.

- Disable default cloud storage for each app that contains incriminating evidence, such as a photo library. Otherwise, the government can obtain the evidence directly from the cloud provider.

That’s quite a tall order. And yet, these same criminals must not be smart enough to do any of the following:

- Install an alternative storage or messaging app.

- Download an app from a website instead of an official app store.

- Use a web-based app instead of a native mobile app.

It’s difficult to believe that many criminals would fit the profile.

Will These Apps Really Get Built?

There’s a slightly different counterargument that I’ve also heard. It’s less common, and it focuses on app developers rather than criminals.

“Sure,” the thinking runs, “it’s impossible to entirely block secure apps. But we don’t need a complete technology ban. We just need to disincentivize building these apps, by making them more difficult to design, distribute, and monetize. The best developers will walk away, and the best secure apps will disappear. That would still be a big win.”

Not so fast. Many secure software developers aren’t incentivized by financial reward. In fact, much of the best secure software is free, open source, and noncommercial. And for those developers who do wish to monetize, there are a plethora of viable options.[10]

As for app design and distribution, that was the discussion earlier. Unless the government is prepared to adopt technology sector regulation that is politically infeasible, inconsistent with prior policy, and possibly unconstitutional, it just can’t do much to obstruct secure apps.

Concluding Thoughts

The frustration felt by law enforcement and intelligence officials is palpable and understandable. Electronic surveillance has revolutionized both fields, and it plays a legitimate role in both investigating crimes and protecting national security. The possibility of losing critical evidence, even if rare, should be cause for reflection.

Cryptographic backdoors are, however, not a solution. Beyond the myriad other objections, they pose too much of a cost-benefit asymmetry. In order to make secure apps just slightly more difficult for criminals to obtain, and just slightly less worthwhile for developers, the government would have to go to extraordinary lengths. In an arms race between cryptographic backdoors and secure apps, the United States would inevitably lose.

Image credits: door, lock, Android bouncer, and Android app kill switch.

1. By international, I mean a project that has international contributors and could easily be coordinated from a foreign location.

2. Google could, for instance, implement a system like SecureDrop for app submissions. I don’t expect that it’s likely, but it is feasible. The government counter-move would be to require Google to adopt a real-name policy for app submissions. Given how critical the United States has been of foreign governments that have imposed similar policies (e.g. China), that seems even more unlikely.

3. I suppose another direction would be to entirely forbid alternative app stores and sideloaded apps. Unless the federal government is prepared to kibosh open platforms, and to require that Android be a walled garden like iOS, that’s even more of a non-starter.

4. There could also be a Fifth Amendment (takings) or Second Amendment (self-defense) challenge. I don’t think the caselaw supports the former, though, and there isn’t yet much caselaw on the latter.

5. Alternatively, the user could be directed to compile the app for themselves with Google’s free Android development kit.

6. Another circumvention of the kill switch, albeit one that’s somewhat more challenging for users, is installing a variant of Android. The popular CyanogenMod operating system, for instance, has particularly good privacy and security features. It likely wouldn’t honor government cryptography takedown requests. The only solution: lock Android users into the pre-installed operating system. That won’t sit well with tinkerers, to be sure. And it puts users at risk—device vendors and carriers are notoriously slow to push out Android updates, so variants of Android can be a more secure alternative.

7. The government could, alternatively, demand an ability to remotely observe device usage. At that point, though, the conversation is much more about government hacking than cryptographic backdoors.

8. There is, presumably, a small subset of not-so-smart criminals who stumble into a secure configuration. The behavioral economics work in computer security and privacy has consistently found that defaults dictate outcomes. There’s even some (indirect) empirical evidence that the overwhelming majority of iOS users have backups enabled, since the overwhelming majority of iOS users quickly receive major OS updates.

9. The absence of a backdoor could slightly delay prospective government access to data. In order to intercept future iMessages, for instance, the government would have to wait until the target’s iPhone backed up to iCloud. That seems a minute investigative burden, and at any rate, law enforcement agencies rarely engage in prospective content interception. (It necessitates a wiretap order, which goes far beyond the requirements of an ordinary warrant.)

10. A range of advertising models come to mind. Just look at the booming ecosystem of questionable “file locker” websites.